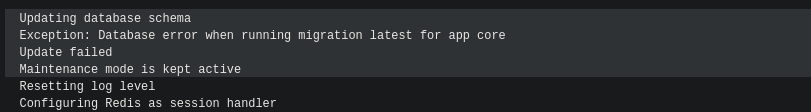

And again… a Nextcloud upgrade failed. After a docker-compose pull and docker-compose up -d the maintenance mode won’t turn off. So I tried to turn it off manually, but I still couldn’t finish the update via WebGUI. I also couldn’t find any errors via the log.

docker exec --user www-data nextcloud-app php /var/www/html/occ maintenance:mode --off

So I triggered the upgrade again from the terminal and finally got an exception.

$ docker exec --user www-data nextcloud-app_1 php /var/www/html/occ upgrade

Nextcloud or one of the apps require upgrade - only a limited number of commands are available

You may use your browser or the occ upgrade command to do the upgrade

Setting log level to debug

Turned on maintenance mode

Updating database schema

Updated database

Updating <user_ldap> ...

An unhandled exception has been thrown:

Error: Call to undefined method OC\DB\QueryBuilder\QueryBuilder::executeQuery() in /var/www/html/apps/user_ldap/lib/Migration/GroupMappingMigration.php:56

Stack trace:

#0 /var/www/html/apps/user_ldap/lib/Migration/Version1130Date20220110154717.php(54): OCA\User_LDAP\Migration\GroupMappingMigration->copyGroupMappingData('ldap_group_mapp...', 'ldap_group_mapp...')

#1 /var/www/html/lib/private/DB/MigrationService.php(528): OCA\User_LDAP\Migration\Version1130Date20220110154717->preSchemaChange(Object(OC\Migration\SimpleOutput), Object(Closure), Array)

#2 /var/www/html/lib/private/DB/MigrationService.php(426): OC\DB\MigrationService->executeStep('1130Date2022011...', false)

#3 /var/www/html/lib/private/legacy/OC_App.php(1012): OC\DB\MigrationService->migrate()

#4 /var/www/html/lib/private/Updater.php(347): OC_App::updateApp('user_ldap')

#5 /var/www/html/lib/private/Updater.php(262): OC\Updater->doAppUpgrade()

#6 /var/www/html/lib/private/Updater.php(134): OC\Updater->doUpgrade('21.0.8.3', '21.0.7.0')

#7 /var/www/html/core/Command/Upgrade.php(249): OC\Updater->upgrade()

#8 /var/www/html/3rdparty/symfony/console/Command/Command.php(255): OC\Core\Command\Upgrade->execute(Object(Symfony\Component\Console\Input\ArgvInput), Object(Symfony\Component\Console\Output\ConsoleOutput))

#9 /var/www/html/3rdparty/symfony/console/Application.php(1009): Symfony\Component\Console\Command\Command->run(Object(Symfony\Component\Console\Input\ArgvInput), Object(Symfony\Component\Console\Output\ConsoleOutput))

#10 /var/www/html/3rdparty/symfony/console/Application.php(273): Symfony\Component\Console\Application->doRunCommand(Object(OC\Core\Command\Upgrade), Object(Symfony\Component\Console\Input\ArgvInput), Object(Symfony\Component\Console\Output\ConsoleOutput))

#11 /var/www/html/3rdparty/symfony/console/Application.php(149): Symfony\Component\Console\Application->doRun(Object(Symfony\Component\Console\Input\ArgvInput), Object(Symfony\Component\Console\Output\ConsoleOutput))

#12 /var/www/html/lib/private/Console/Application.php(215): Symfony\Component\Console\Application->run(Object(Symfony\Component\Console\Input\ArgvInput), Object(Symfony\Component\Console\Output\ConsoleOutput))

#13 /var/www/html/console.php(100): OC\Console\Application->run()

#14 /var/www/html/occ(11): require_once('/var/www/html/c...')

Quick search on google and it seems that the image for 21.0.8 is broken and already withdrawn. Here and here. Great if users can still pull it from the docker repo…

I disabled the user_ldap addon via command line. This site helped me finding the right commands. After another occ upgrade and a few minutes, the instance finally came back online.

docker exec --user www-data nextcloud-app php /var/www/html/occ app:list

docker exec --user www-data nextcloud-app php /var/www/html/occ app:disable user_ldap

docker exec --user www-data nextcloud-app php /var/www/html/occ maintenance:mode --off

docker exec --user www-data nextcloud-app php /var/www/html/occ upgrade

In the end I also stumbled across the official nextcloud blog, where they announce 20.0.9 and that they had problems with 20.0.8…. But if the new docker image is not yet provided, and you can’t downgrade from your broken 20.0.8 back to 20.0.7, this doesn’t help you at all.