“Western Digital will in future make it easier to tell which WD Red hard drives use classic Conventional Magnetic Recording (CMR) and which use the potentially slower Shingled Magnetic Recording (SMR): the manufacturer is moving all CMR models of the WD Red series into the new WD Red Plus line. Anyone who buys a regular WD Red hard drive without a name suffix from now on will therefore be sure to get an SMR variant.”

CMR = Conventional Magnetic Recording

SMR = Shingled Magnetic Recording (unsuitable for NAS)

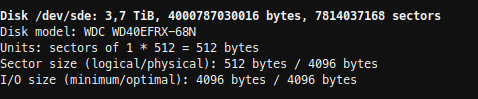

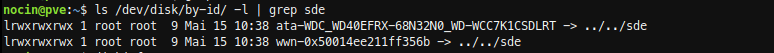

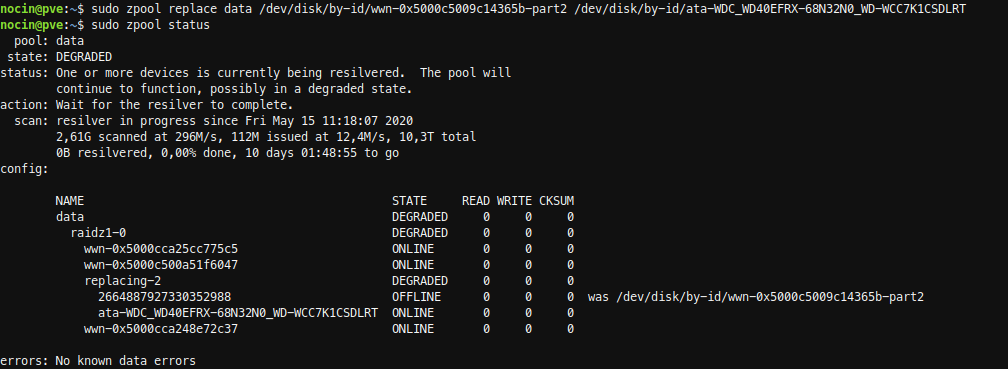

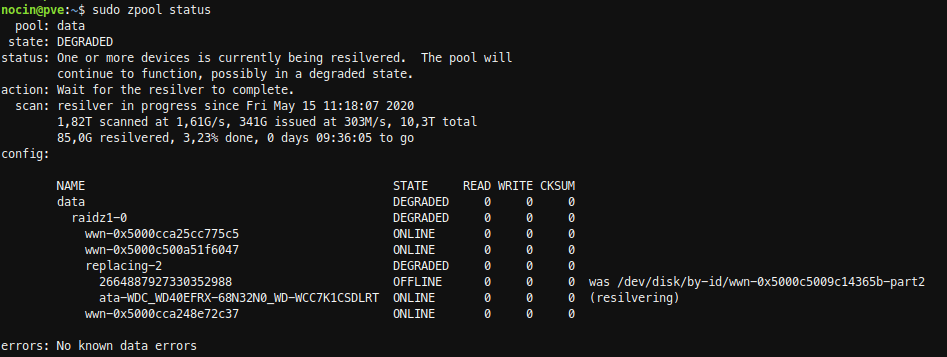

CMR model numbers: WD10EFRX (1 TB), WD20EFRX (2 TB), WD30EFRX (3 TB), WD40EFRX (4 TB), WD60EFRX (6 TB), WD80EFAX (8 TB), WD101EFAX (10 TB), WD120EFAX (12 TB), WD140EFAX (14 TB)

SMR model numbers: WD20EFAX (2 TB), WD30EFAX (3 TB), WD40EFAX (4 TB) and WD60EFAX (6 TB)
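
To check a drive against the two lists, a small lookup table is enough. The following is a minimal sketch, not an official tool: the function name `recording_technology` is made up for illustration, and the handling of a trailing `-…` suffix on the label is an assumption about how the model string might be printed. It only knows the model numbers listed here.

```python
from typing import Optional

# Model numbers and capacities (in TB) copied from the two lists above.
CMR_MODELS = {
    "WD10EFRX": 1, "WD20EFRX": 2, "WD30EFRX": 3, "WD40EFRX": 4,
    "WD60EFRX": 6, "WD80EFAX": 8, "WD101EFAX": 10, "WD120EFAX": 12,
    "WD140EFAX": 14,
}
SMR_MODELS = {
    "WD20EFAX": 2, "WD30EFAX": 3, "WD40EFAX": 4, "WD60EFAX": 6,
}


def recording_technology(model: str) -> Optional[str]:
    """Return "CMR", "SMR", or None if the model is not in either list."""
    # Assumption: anything after a hyphen is a revision suffix and is ignored.
    base = model.strip().upper().split("-", 1)[0]
    if base in CMR_MODELS:
        return "CMR"
    if base in SMR_MODELS:
        return "SMR"
    return None


if __name__ == "__main__":
    for name in ("WD40EFRX", "WD40EFAX", "WD80EFAX", "WD50XXXX"):
        print(name, recording_technology(name))
```

The table is keyed on the full model number because the suffix alone does not settle it: in the lists above, WD40EFAX is SMR while WD80EFAX is CMR.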