https://pve.proxmox.com/wiki/Unprivileged_LXC_containers

https://www.reddit.com/r/Proxmox/comments/jz5ugx/lxc_user_mapping_help/

I had to map the user nocin inside my LXC container (uid=1000(nocin) gid=1000(nocin)) to the user nocin on the host (uid=1000(nocin) gid=1000(nocin)). Because the uid and gid are the same on the host and inside the container, the mapping is simply 1000 to 1000.

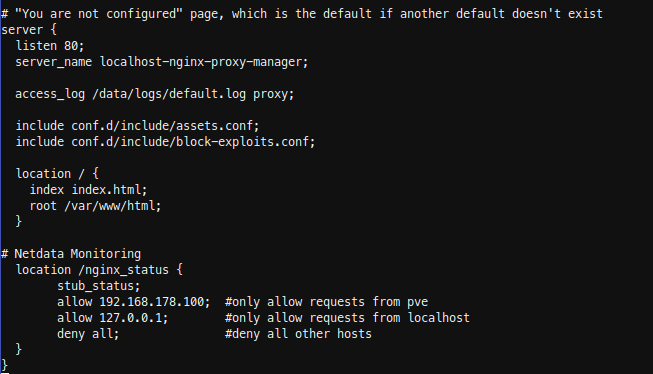

$ nano /etc/pve/lxc/114.conf

# had to append these lines

lxc.idmap: u 0 100000 1000

lxc.idmap: g 0 100000 1000

lxc.idmap: u 1000 1000 1

lxc.idmap: g 1000 1000 1

lxc.idmap: u 1001 101001 64535

lxc.idmap: g 1001 101001 64535
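The six lines above split the container's ID range into three parts: IDs 0 to 999 are shifted to 100000 to 100999 on the host, ID 1000 is passed straight through, and IDs 1001 to 65535 are shifted to 101001 to 165535. The arithmetic can be sketched in shell as below. `idmap_lines` is a hypothetical helper, not a Proxmox tool, and it assumes the usual offset of 100000, a range of 65536 IDs, and a passthrough ID greater than 0.

```shell
#!/bin/sh
# Hypothetical helper (not part of Proxmox): print the lxc.idmap lines that
# pass one ID straight through and shift every other ID by the base offset.
idmap_lines() {
    id=$1                      # uid/gid shared by host and container, e.g. 1000
    base=100000                # assumed offset for unprivileged containers
    total=65536                # assumed size of the container's ID range
    rest=$((total - id - 1))   # number of IDs above the passthrough ID
    for kind in u g; do
        echo "lxc.idmap: $kind 0 $base $id"
        echo "lxc.idmap: $kind $id $id 1"
        echo "lxc.idmap: $kind $((id + 1)) $((base + id + 1)) $rest"
    done
}

idmap_lines 1000
```

Called with 1000, this prints the same six entries as above, grouped by `u` and `g` rather than interleaved.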

Also append the following line to /etc/subuid and /etc/subgid on the host. It allows root to map host ID 1000 into a container.

root:1000:1
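If you repeat this for several containers, it is easy to end up with the same entry more than once. A minimal sketch of an idempotent append follows. `allow_root_map` is a hypothetical helper, and the demo runs against a scratch file instead of the real /etc/subuid and /etc/subgid.

```shell
#!/bin/sh
# Hypothetical helper: append "root:<id>:1" to a subuid/subgid-style file,
# but only if that exact line is not already present.
allow_root_map() {
    file=$1
    entry="root:$2:1"
    grep -qxF "$entry" "$file" 2>/dev/null || echo "$entry" >> "$file"
}

# Demo on a scratch file that mimics a typical /etc/subuid.
tmp=$(mktemp)
echo "root:100000:65536" > "$tmp"
allow_root_map "$tmp" 1000
allow_root_map "$tmp" 1000   # second call changes nothing
cat "$tmp"
```

On the host you would point it at the real files, e.g. `allow_root_map /etc/subuid 1000` and `allow_root_map /etc/subgid 1000`, as root.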

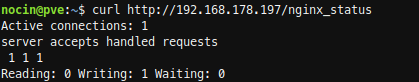

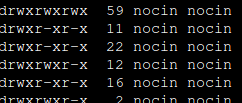

Now all mount points are fully accessible inside the container and are no longer shown as owned by “nobody/nogroup”.

If you cannot access your home directory inside the container after the user mapping, you can fix its ownership directly from the host. Find the container's root filesystem on the host and change the owner of the home directory to your uid and gid.

$ cd /rpool/data/subvol-114-disk-0/home/

$ chown -R 1000:1000 nocin/

$ ls -l

drwxr-x---+ 5 nocin nocin 9 Mai 16 11:22 nocin