Handy CLI tool to monitor your applications and resource usage.

[CAP] Fiori Elements – Add button in table toolbar to trigger action

A button that calls an action or function can be added to the table toolbar with a single line in the annotations.cds file:

UI.LineItem : [

{ $Type: 'UI.DataFieldForAction', Action: 'myService.EntityContainer/myAction', Label: 'This is my button label' },

...

],

[SuccessFactors] OData V2 – filter in (VSC Rest-API Client)

When filtering a SuccessFactors OData V2 endpoint, you can list the values separated by commas after the in keyword (option 2). This is much shorter than repeating the filter condition for every value, as in option 1.

@user1=10010

@user2=10020

### Option 1: Filter userId using OR condition

GET {{$dotenv api_url}}/odata/v2/User?$filter=userId eq '{{user1}}' or userId eq '{{user2}}'

Authorization: Basic {{$dotenv api_auth}}

Accept: application/json

### Option 2: Filter userId using IN condition

GET {{$dotenv api_url}}/odata/v2/User?$filter=userId in '{{user1}}', '{{user2}}'

Authorization: Basic {{$dotenv api_auth}}

Accept: application/json

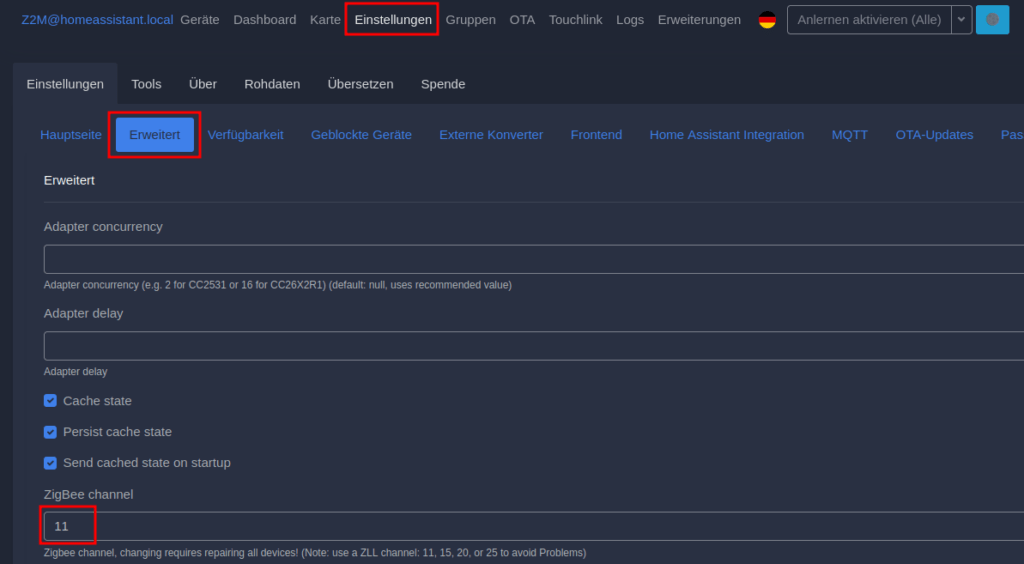
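If the list of user IDs comes from code rather than from a .http file, the same filter can be assembled programmatically. The following is a minimal sketch: the helper names buildInFilter and buildOrFilter and the base URL are assumptions made for illustration, not part of any SAP library.

```javascript
// Hypothetical helper, not part of any SAP library: builds an "in" filter
// such as  userId in '10010', '10020'  from a list of values.
function buildInFilter(field, values) {
  if (values.length === 0) {
    throw new Error("at least one value is required");
  }
  // OData string literals escape a single quote by doubling it.
  const quoted = values.map((v) => `'${String(v).replace(/'/g, "''")}'`);
  return `${field} in ${quoted.join(", ")}`;
}

// Equivalent "or" chain, shown for comparison with option 1.
function buildOrFilter(field, values) {
  return values
    .map((v) => `${field} eq '${String(v).replace(/'/g, "''")}'`)
    .join(" or ");
}

// Placeholder base URL (assumption); use your own API host here.
const baseUrl = "https://api.example.invalid";
const filter = buildInFilter("userId", ["10010", "10020"]);
// Encode the filter so spaces and commas are safe inside the query string.
const url = `${baseUrl}/odata/v2/User?$filter=${encodeURIComponent(filter)}`;

console.log(filter);
console.log(buildOrFilter("userId", ["10010", "10020"]));
console.log(url);
```

The in variant grows by one short value per user, while the or variant repeats the field name and operator each time.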

[Home Assistant] Zigbee2MQTT – Check the currently used channel

In Zigbee2MQTT, go to Settings -> Advanced. The current channel is shown in the ZigBee Channel field.

[CAP] Create value help with distinct values

using my.Model as db from '../db/data-model';

service myService @(requires: 'authenticated-user') {

@readonly

@cds.odata.valuelist

entity uniqueValues as select from db.Table distinct {

key field

};

}

[CAP] Update standalone approuter

You can update your standalone approuter on its own with cf push:

cd ~/projects/bookshop/app/approuter/

cf push bookshop-approuter # take your approuter name from the mta.yaml file

cd ~/projects/bookshop/

[nodejs] workspaces

If you have subdirectories or additional applications that have their own package.json file, you can add them to your main project via the workspaces setting.

{

"name": "my-project",

"workspaces": [

"./app/*"

]

}

Running npm install in the project root now also installs the dependencies of all projects in the app folder.
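To check which folders a pattern like "./app/*" actually picks up, a small Node.js script can help. This is only a sketch: listWorkspaces is a hypothetical helper, it assumes workspaces is a plain array, and it handles only plain folder names and a single trailing "/*", whereas npm itself supports full glob patterns.

```javascript
const fs = require("node:fs");
const path = require("node:path");

// Hypothetical helper: returns the folders (relative to rootDir) that match
// the workspaces entries in rootDir/package.json and contain a package.json.
function listWorkspaces(rootDir) {
  const pkgFile = path.join(rootDir, "package.json");
  const pkg = JSON.parse(fs.readFileSync(pkgFile, "utf8"));
  const found = [];

  for (const pattern of pkg.workspaces ?? []) {
    // Candidate folders: either the children of "dir/*" or the folder itself.
    let candidates = [];
    if (pattern.endsWith("/*")) {
      const parent = path.join(rootDir, pattern.slice(0, -2));
      if (fs.existsSync(parent)) {
        candidates = fs
          .readdirSync(parent, { withFileTypes: true })
          .filter((entry) => entry.isDirectory())
          .map((entry) => path.join(parent, entry.name));
      }
    } else {
      candidates = [path.join(rootDir, pattern)];
    }

    // Only folders with their own package.json count as a workspace.
    for (const dir of candidates) {
      if (fs.existsSync(path.join(dir, "package.json"))) {
        found.push(path.relative(rootDir, dir));
      }
    }
  }
  return found.sort();
}
```

Calling listWorkspaces(process.cwd()) from the project root returns the matching folders. npm can then address a single one with the --workspace option, for example npm install --workspace=app/approuter.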

[SAP] Set default download path in SU01

SU01 -> Edit user -> Parameter -> Add Parameter GR8 and your preferred path -> Save

[SAP] Reset different SAP buffers

https://wiki.scn.sap.com/wiki/display/Basis/How+to+Reset+different+SAP+buffers

/$SYNC - Resets the buffers of the application server

/$CUA - Resets the CUA buffer of the application server

/$TAB - Resets the TABLE buffers of the application server

/$NAM - Resets the nametab buffer of the application server

/$DYN - Resets the screen buffer of the application server

/$ESM - Resets the Exp./Imp. Shared Memory buffer of the application server

/$PXA - Resets the Program (PXA) buffer of the application server

/$OBJ - Resets the Shared buffer of the application server

[ABAP] convert string to xstring and convert back

DATA(lv_string) = |My string I want to convert to xstring.|.

TRY.

DATA(lv_xstring) = cl_abap_codepage=>convert_to( lv_string ).

DATA(lv_string_decoded) = cl_abap_codepage=>convert_from( lv_xstring ).

WRITE: / lv_string,

/ lv_xstring,

/ lv_string_decoded.

CATCH cx_root INTO DATA(e).

WRITE: / e->get_text( ).

ENDTRY.
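To cross-check the bytes outside the SAP system, the same round trip can be sketched in Node.js. This assumes the ABAP call above uses UTF-8, which as far as I know is the default codepage of cl_abap_codepage=>convert_to. The helper toHex is hypothetical and only mimics the uppercase hex form in which an xstring is usually displayed.

```javascript
// TextEncoder always produces UTF-8; TextDecoder defaults to UTF-8.
const encoder = new TextEncoder();
const decoder = new TextDecoder();

// Hypothetical helper: renders bytes as uppercase hex, two digits per byte.
function toHex(bytes) {
  return Array.from(bytes, (b) => b.toString(16).padStart(2, "0"))
    .join("")
    .toUpperCase();
}

const text = "My string I want to convert to xstring.";
const bytes = encoder.encode(text);   // string -> UTF-8 bytes
const decoded = decoder.decode(bytes); // UTF-8 bytes -> string

console.log(text);
console.log(toHex(bytes));
console.log(decoded);
```

If the hex output differs from what the ABAP report prints, the two sides are most likely using different codepages.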